WoundKare: An Augmented Reality-Based Intelligent Wound Management System Using DeepSkin Analytics

INTRODUCTION

Chronic wound management in elderly patients presents a significant challenge for healthcare systems globally. With an ageing population and a high prevalence of pressure ulcers, diabetic foot ulcers, and venous leg ulcers, there is growing demand for reliable, effective, and scalable solutions that help clinicians assess and monitor wounds in a timely manner. Traditional approaches rely on manual inspection, photographic documentation, and subjective estimates of wound progression, which often lead to inconsistencies in diagnosis and reduced treatment efficiency.

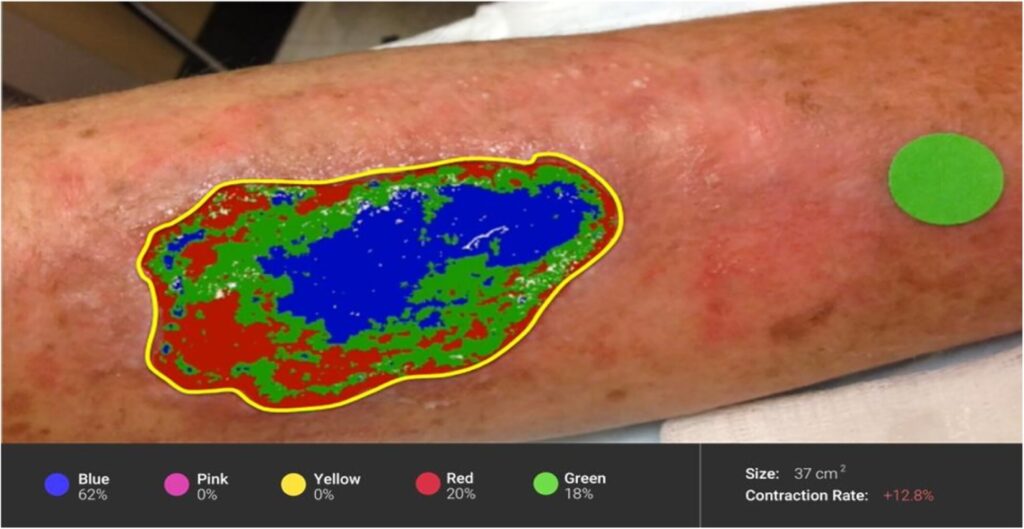

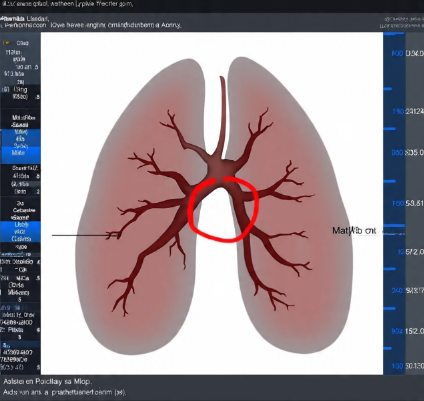

- Figure 1: Clinical interface for patient wound detection and tissue classification

To address these gaps, we present WoundKare, a smart and interactive wound assessment system that combines computer vision, deep learning, and augmented reality (AR). At its core is the DeepSkin algorithm, a robust neural network model for medical image segmentation, fine-tuned for tissue classification [6] [7]. Using this model, WoundKare identifies the wound area in input images, segments the wound tissue, and classifies it into four clinically recognized types: necrotic, slough, epithelializing, and granulating. In addition, the app calculates geometric wound features, including length, width, area, and estimated volume, which are important metrics for monitoring treatment progression.

The integration of AR in WoundKare further allows clinicians and caregivers to visualize wound changes over time with intuitive overlays, enhancing understanding and engagement [1] [2]. The system is designed particularly for aged care environments, where frequent wound assessments by specialists may not be feasible and where care staff benefit from digital decision-support tools. By automating and standardizing wound documentation and analysis, WoundKare aims not only to reduce clinical workload but also to improve patient outcomes through early and accurate detection of wound deterioration or improvement.

System Architecture

The WoundKare system comprises five core components:

User Interface Layer:

Built as a cross-platform mobile application, the interface allows users (patients or caregivers) to capture wound photos using a phone or tablet. It includes functionality for uploading photos, viewing historical records, and receiving visual analytics and recommendations.

- Figure 2: Mobile app for patient data entry for wound detection

Image Preprocessing Module:

This module ensures consistent input to the DeepSkin model by applying filters for brightness normalization, noise reduction, and contrast enhancement. Edge-preserving smoothing techniques are used to maintain wound boundary clarity.
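As a rough illustration of these preprocessing steps, the sketch below applies a min-max brightness/contrast stretch followed by a 3×3 median filter, a simple edge-preserving smoother, to a grayscale image stored as nested lists. The function names and the choice of a median filter are assumptions for illustration only; the exact filters used by the WoundKare module (for example bilateral filtering or histogram equalization) are not specified in this article.

```python
from statistics import median

# A grayscale image as rows of intensity values (hypothetical representation).
Image = list[list[float]]


def normalize_brightness(img: Image) -> Image:
    """Min-max stretch intensities to [0, 1], a simple contrast enhancement."""
    flat = [p for row in img for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # flat image: nothing to stretch
        return [[0.0 for _ in row] for row in img]
    return [[(p - lo) / (hi - lo) for p in row] for row in img]


def median_filter(img: Image, radius: int = 1) -> Image:
    """Replace each pixel with the median of its neighbourhood.

    Median filtering removes isolated noisy pixels while keeping step edges
    (such as a wound boundary) sharp, unlike a plain mean blur.
    """
    h, w = len(img), len(img[0])
    out: Image = []
    for y in range(h):
        row = []
        for x in range(w):
            # The window is clipped at the image borders.
            window = [
                img[yy][xx]
                for yy in range(max(0, y - radius), min(h, y + radius + 1))
                for xx in range(max(0, x - radius), min(w, x + radius + 1))
            ]
            row.append(float(median(window)))
        out.append(row)
    return out


def preprocess(img: Image) -> Image:
    """Hypothetical pipeline: normalize brightness, then denoise."""
    return median_filter(normalize_brightness(img))


if __name__ == "__main__":
    # Synthetic step edge (dark left, bright right) with one noisy pixel.
    img = [[10, 10, 10, 200, 200, 200] for _ in range(5)]
    img[2][1] = 200  # impulse noise inside the dark region
    print(preprocess(img)[2])
```

On this synthetic input the isolated bright pixel is removed while the dark-to-bright edge stays at the same column, which is the behaviour an edge-preserving smoother should have near a wound boundary.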

DeepSkin Wound Analysis Engine:

The core of the system, this deep learning engine performs wound boundary detection, tissue segmentation, classification, and measurement estimation. It returns processed images with overlaid wound zones and measurements [2].
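The measurement-estimation step can be illustrated with a minimal sketch that derives length, width, and area from a binary wound mask. It assumes square pixels with a known physical scale (`mm_per_pixel`, a hypothetical parameter that might come from a calibration marker or AR depth data) and takes length and width as the vertical and horizontal extents of the mask's bounding box, which is a simplification. Volume estimation needs depth information and is not shown.

```python
from dataclasses import dataclass

# Binary segmentation mask: 1 = wound pixel, 0 = background.
Mask = list[list[int]]


@dataclass
class WoundMeasurements:
    length_mm: float
    width_mm: float
    area_mm2: float


def measure_wound(mask: Mask, mm_per_pixel: float) -> WoundMeasurements:
    """Bounding-box length/width and pixel-count area of a binary wound mask.

    Assumes square pixels of side `mm_per_pixel` (hypothetical scale input).
    Length is the vertical extent and width the horizontal extent of the mask.
    """
    coords = [(y, x) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not coords:  # no wound detected
        return WoundMeasurements(0.0, 0.0, 0.0)
    ys = [y for y, _ in coords]
    xs = [x for _, x in coords]
    length_px = max(ys) - min(ys) + 1
    width_px = max(xs) - min(xs) + 1
    return WoundMeasurements(
        length_mm=length_px * mm_per_pixel,
        width_mm=width_px * mm_per_pixel,
        area_mm2=len(coords) * mm_per_pixel ** 2,  # each pixel covers scale^2
    )
```

Counting pixels gives the true segmented area even for irregular shapes, whereas the bounding-box length and width overestimate the extent of a tilted wound; a production system might instead measure along the wound's principal axes.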

Augmented Reality Visualizer:

Using the device’s camera and sensors, the AR module overlays real-time information, such as wound progression markers, size guides, and healing status, on the patient’s skin surface.

Backend & Cloud Storage:

Patient records, wound history, and analysis data are securely stored in the cloud, supporting longitudinal tracking and remote clinician access. Data privacy is protected using encryption and secure access protocols designed for HIPAA/GDPR compliance.


DeepSkin Model and Training Methodology

The DeepSkin model is a custom implementation of a U-Net architecture optimized for semantic segmentation of wound images. The training pipeline includes the following stages:

Dataset Preparation:

The model is trained on a diverse wound image dataset annotated by clinicians, including various wound types and tissue classifications [3]. The dataset includes thousands of annotated samples labeled pixel-wise into Necrotic, Slough, Epithelializing, and Granulating tissues.

Preprocessing:

Data augmentation techniques such as rotation, flipping, brightness adjustment, and Gaussian noise were applied to increase robustness against variations in wound presentation and lighting.
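A key detail when augmenting segmentation data is that geometric transforms (flips, rotations) must be applied identically to the image and its pixel-wise label mask, while photometric changes (brightness, Gaussian noise) apply to the image only. The sketch below shows this on nested-list grayscale images with intensities in [0, 1]; the flip probability, brightness range, and noise level are illustrative assumptions, not the values used to train DeepSkin.

```python
import random

Grid = list[list[float]]


def hflip(grid: Grid) -> Grid:
    """Mirror left-right."""
    return [row[::-1] for row in grid]


def rot90(grid: Grid) -> Grid:
    """Rotate 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]


def augment(image: Grid, mask: Grid, rng: random.Random) -> tuple[Grid, Grid]:
    """Randomly augment an (image, mask) pair; mask label values are never altered."""
    # Geometric transforms: identical for image and mask so labels stay aligned.
    if rng.random() < 0.5:
        image, mask = hflip(image), hflip(mask)
    for _ in range(rng.randrange(4)):  # 0, 90, 180 or 270 degrees
        image, mask = rot90(image), rot90(mask)

    # Photometric transforms: image only. A brightness gain plus Gaussian noise,
    # clipped back to the valid [0, 1] intensity range.
    gain = rng.uniform(0.8, 1.2)
    noisy = [
        [min(1.0, max(0.0, p * gain + rng.gauss(0.0, 0.02))) for p in row]
        for row in image
    ]
    return noisy, mask
```

Passing an explicit `random.Random` instance keeps augmentation reproducible for a given seed, which helps when debugging a training run.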

Model Architecture:

The U-Net model consists of an encoder-decoder structure with skip connections, enabling accurate localization while preserving spatial resolution. Batch normalization and dropout layers are used to enhance generalization.
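To make the encoder-decoder and skip-connection structure concrete, the sketch below traces feature-map shapes through a generic U-Net. It assumes "same"-padded convolutions, 2×2 max pooling, filter counts that double at each level from a base of 64, and five output classes (the four tissue types plus a background class). DeepSkin's actual depth, filter counts, and padding scheme are not given in this article, so these are illustrative values only; note that the original U-Net of Ronneberger et al. used unpadded convolutions, so its output is smaller than its input.

```python
def unet_feature_shapes(height: int, width: int, depth: int = 4, base: int = 64,
                        num_classes: int = 5) -> list[tuple[str, int, int, int]]:
    """Return (stage, H, W, C) for each stage of a same-padded U-Net.

    Batch normalization and dropout do not change shapes, so they are omitted.
    """
    if height % 2 ** depth or width % 2 ** depth:
        raise ValueError(f"input size must be divisible by 2**depth = {2 ** depth}")
    stages: list[tuple[str, int, int, int]] = []
    skips: list[tuple[int, int, int]] = []
    h, w, c = height, width, base
    # Encoder: convs at full resolution, then 2x2 max-pool and double the filters.
    for level in range(depth):
        stages.append((f"enc{level}", h, w, c))
        skips.append((h, w, c))  # saved for the skip connection
        h, w, c = h // 2, w // 2, c * 2
    stages.append(("bottleneck", h, w, c))
    # Decoder: 2x2 up-convolution halves channels, then concatenate the skip.
    for level in reversed(range(depth)):
        skip_h, skip_w, skip_c = skips[level]
        h, w, c = h * 2, w * 2, c // 2
        assert (h, w) == (skip_h, skip_w), "skip and decoder maps must align"
        stages.append((f"concat{level}", h, w, c + skip_c))
        stages.append((f"dec{level}", h, w, c))  # convs reduce back to c channels
    # 1x1 convolution to per-pixel class scores.
    stages.append(("logits", h, w, num_classes))
    return stages
```

For a 256×256 input this gives a 16×16×1024 bottleneck and a 256×256×5 output, showing how the skip connections restore full spatial resolution for per-pixel tissue labels.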

Loss Function & Optimization:

A weighted categorical cross-entropy loss is used to handle class imbalance, combined with a Dice-coefficient-based loss term to emphasize segmentation overlap. The Adam optimizer is used with an adaptive learning rate scheduler.
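The combined objective can be sketched for a handful of pixels: a class-weighted cross-entropy, normalized by the sum of the weights of the true classes, plus a soft Dice loss averaged over classes. The weighting scheme, the `dice_weight` balance factor, and the smoothing constant `eps` are assumptions for illustration; a real training loop would compute the same quantities with a deep learning framework's tensor operations.

```python
import math

# Each pixel has a probability vector over classes (e.g. a softmax output)
# and an integer ground-truth label.
Probs = list[list[float]]
Labels = list[int]


def weighted_cross_entropy(probs: Probs, labels: Labels,
                           class_weights: list[float], eps: float = 1e-7) -> float:
    """Class-weighted mean of -log p(true class); rare classes can be up-weighted."""
    total, weight_sum = 0.0, 0.0
    for p, y in zip(probs, labels):
        w = class_weights[y]
        total += -w * math.log(max(p[y], eps))  # eps guards against log(0)
        weight_sum += w
    return total / weight_sum


def soft_dice_loss(probs: Probs, labels: Labels, num_classes: int,
                   eps: float = 1e-7) -> float:
    """1 - mean over classes of 2*intersection / (predicted + actual)."""
    dice_total = 0.0
    for k in range(num_classes):
        intersection = sum(p[k] for p, y in zip(probs, labels) if y == k)
        predicted = sum(p[k] for p in probs)
        actual = sum(1 for y in labels if y == k)
        dice_total += (2 * intersection + eps) / (predicted + actual + eps)
    return 1.0 - dice_total / num_classes


def combined_loss(probs: Probs, labels: Labels, class_weights: list[float],
                  dice_weight: float = 1.0) -> float:
    """Weighted cross-entropy plus a weighted soft Dice term."""
    ce = weighted_cross_entropy(probs, labels, class_weights)
    dice = soft_dice_loss(probs, labels, num_classes=len(class_weights))
    return ce + dice_weight * dice
```

The cross-entropy term gives dense per-pixel gradients, while the Dice term directly rewards overlap and is less dominated by large classes, which is why the two are often combined for imbalanced tissue labels.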

Validation:

Performance is evaluated on a held-out test set using metrics such as Intersection-over-Union (IoU), F1-score, and pixel accuracy. Cross-validation is used to assess robustness across diverse wound appearances.
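For reference, the three metrics named above can be computed from flattened predicted and ground-truth label maps as sketched below. Per class, IoU is TP / (TP + FP + FN) and the F1-score (equivalent to the Dice coefficient for binary masks) is 2TP / (2TP + FP + FN); pixel accuracy is the fraction of pixels labelled correctly. The function name and output format are hypothetical.

```python
def segmentation_metrics(pred: list[int], target: list[int],
                         num_classes: int) -> tuple[float, dict[int, dict[str, float]]]:
    """Pixel accuracy plus per-class IoU and F1 for flat label lists."""
    if len(pred) != len(target) or not target:
        raise ValueError("pred and target must be non-empty and the same length")
    pixel_accuracy = sum(p == t for p, t in zip(pred, target)) / len(target)

    per_class: dict[int, dict[str, float]] = {}
    for k in range(num_classes):
        tp = sum(p == k and t == k for p, t in zip(pred, target))
        fp = sum(p == k and t != k for p, t in zip(pred, target))
        fn = sum(p != k and t == k for p, t in zip(pred, target))
        denom = tp + fp + fn
        # A class absent from both prediction and ground truth is undefined (NaN).
        iou = tp / denom if denom else float("nan")
        f1 = 2 * tp / (2 * tp + fp + fn) if denom else float("nan")
        per_class[k] = {"iou": iou, "f1": f1}
    return pixel_accuracy, per_class
```

Pixel accuracy alone can look high when background dominates the image, which is why per-class IoU and F1 are reported alongside it.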


AR Integration and User Interface

The AR module in WoundKare uses ARCore/ARKit frameworks, depending on the device platform, to enable real-time wound visualization and measurement [5]. Key features include:

Live Wound Overlay:

Once the wound is detected and segmented by DeepSkin, the app uses AR to superimpose healing progress indicators (change in wound area) directly over the skin via the device’s camera.
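One common way to express a change-in-wound-area indicator is the percentage area reduction relative to an earlier assessment. The sketch below computes it and maps it to a status label that an overlay could colour-code; the 5% tolerance band and the label names are hypothetical choices, not thresholds stated for WoundKare.

```python
def healing_progress(previous_area_mm2: float, current_area_mm2: float,
                     tolerance: float = 0.05) -> tuple[float, str]:
    """Return (fractional area reduction, status) between two assessments.

    A positive reduction means the wound has shrunk. Changes smaller than
    `tolerance` (a hypothetical 5% band) are reported as stable.
    """
    if previous_area_mm2 <= 0:
        raise ValueError("previous area must be positive")
    reduction = (previous_area_mm2 - current_area_mm2) / previous_area_mm2
    if reduction > tolerance:
        status = "improving"
    elif reduction < -tolerance:
        status = "deteriorating"
    else:
        status = "stable"
    return reduction, status
```

For example, a wound that shrinks from 400 mm² to 300 mm² has a 25% area reduction and would be labelled "improving".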

Measurement Tools:

Virtual rulers and markers appear in real-time, allowing clinicians to verify wound dimensions visually. This improves confidence in AI-calculated measurements [4].
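Both ARKit and ARCore express world-space positions in metres, so a virtual ruler can measure the straight-line distance between two points the user places on the skin (for example via hit tests against a detected surface). The sketch below assumes those two 3D positions are already available as `(x, y, z)` tuples and converts their separation to millimetres. The function names and the 10% agreement tolerance used to flag discrepancies with the AI estimate are hypothetical.

```python
import math

Point3D = tuple[float, float, float]


def ruler_length_mm(start_m: Point3D, end_m: Point3D) -> float:
    """Euclidean distance between two world-space points (metres), in mm."""
    return math.dist(start_m, end_m) * 1000.0


def agrees_with_model(model_mm: float, ruler_mm: float,
                      rel_tolerance: float = 0.10) -> bool:
    """True if an AI-estimated length matches the AR ruler within a relative tolerance."""
    return abs(model_mm - ruler_mm) <= rel_tolerance * ruler_mm
```

A straight-line distance ignores skin curvature, so on strongly curved body sites the ruler gives a slight underestimate of the surface length.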

Interactive Interface:

Users can select time points to view previous wound states, with visual comparisons enabled through AR overlays showing progress or regression.
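Comparing two time points can be reduced to a per-pixel comparison of the wound masks, which an overlay could then colour by category. This sketch assumes the two masks have already been registered to the same coordinate frame (aligning images taken on different days is a separate, non-trivial step) and uses hypothetical category names.

```python
from typing import Optional

Mask = list[list[int]]  # 1 = wound pixel, 0 = background


def compare_masks(previous: Mask, current: Mask) -> tuple[list[list[Optional[str]]], dict[str, int]]:
    """Label each pixel as healed, new, or persisting wound (None = never wound).

    Assumes both masks are already aligned pixel-for-pixel.
    """
    counts = {"healed": 0, "new": 0, "persisting": 0}
    overlay: list[list[Optional[str]]] = []
    for prev_row, cur_row in zip(previous, current):
        row: list[Optional[str]] = []
        for was_wound, is_wound in zip(prev_row, cur_row):
            if was_wound and not is_wound:
                label = "healed"       # closed since the earlier assessment
            elif is_wound and not was_wound:
                label = "new"          # wound has extended into this pixel
            elif was_wound and is_wound:
                label = "persisting"
            else:
                label = None
            if label is not None:
                counts[label] += 1
            row.append(label)
        overlay.append(row)
    return overlay, counts
```

The per-category counts give a quick summary of progress or regression, while the overlay shows where on the wound the change happened.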

Accessibility:

The UI is designed for elderly users and non-expert caregivers, with large fonts, voice prompts, and visual cues to facilitate usability in real-world clinical settings.

Evaluation and Results

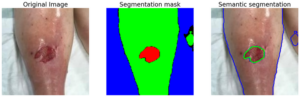

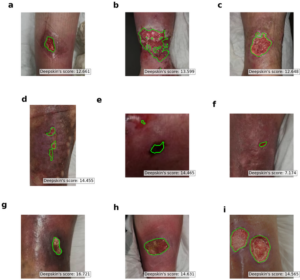

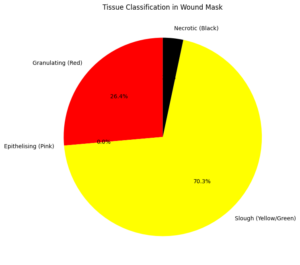

WoundKare was evaluated in a clinical simulation setting and with anonymized historical wound images. Key results include:

- Figure 3: Wound detection output image from the DeepSkin Python model

- Figure 4: Wound detection with its segmentation mask and semantic segmentation using the Python-based DeepSkin algorithm

- Figure 5: Multiple wound detection output images from the DeepSkin Python model

- Figure 6: Tissue classification of wound detection images based on wound condition

Tissue Classification Accuracy:

- Necrotic: 89.2%

- Slough: 85.6%

- Granulating: 91.4%

- Epithelializing: 87.9%

Measurement Accuracy:

Compared with manual measurements, the app showed an error margin of less than 5% in wound length, area, and volume estimations.
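The article does not state whether the 5% figure is a mean or a worst-case error. The sketch below computes per-case absolute percentage errors against manual measurements and reports both the mean and the maximum; the example values are made up for illustration and are not study data.

```python
def percentage_errors(app_values: list[float], manual_values: list[float]) -> list[float]:
    """Absolute percentage error of each app measurement against the manual reference."""
    if len(app_values) != len(manual_values) or not app_values:
        raise ValueError("inputs must be non-empty and paired")
    return [abs(a - m) / m * 100.0 for a, m in zip(app_values, manual_values)]


def summarize_errors(app_values: list[float],
                     manual_values: list[float]) -> tuple[float, float]:
    """Return (mean, max) absolute percentage error."""
    errors = percentage_errors(app_values, manual_values)
    return sum(errors) / len(errors), max(errors)


if __name__ == "__main__":
    # Hypothetical wound lengths in mm (not study data).
    app = [10.2, 19.5, 31.0]
    manual = [10.0, 20.0, 30.0]
    mean_err, max_err = summarize_errors(app, manual)
    print(f"mean {mean_err:.2f}%, max {max_err:.2f}%")
```

Reporting the maximum alongside the mean shows whether a small average error hides occasional large misses.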

User Satisfaction:

In usability tests with 20 caregivers in aged care homes, satisfaction was high, with 92% stating that the app improved their confidence in wound monitoring.

Inference Time:

The average processing time per image (on-device) was under 3 seconds, enabling near real-time analysis.
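Average per-image latency figures like this are typically obtained by timing repeated inference calls after a few warm-up runs, which absorb one-time costs such as model loading. The sketch below shows that pattern with Python's `time.perf_counter`; `infer` stands in for a hypothetical function that runs the model on one image.

```python
import time
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def mean_latency_s(infer: Callable[[T], object], images: Sequence[T],
                   warmup: int = 2) -> float:
    """Average wall-clock seconds per image, excluding warm-up runs."""
    if not images:
        raise ValueError("need at least one image")
    for img in images[:warmup]:
        infer(img)  # warm-up calls are not timed
    start = time.perf_counter()
    for img in images:
        infer(img)
    return (time.perf_counter() - start) / len(images)
```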


Clinical Relevance and Future Scope

WoundKare addresses critical gaps in wound care by offering an objective, repeatable, and automated assessment mechanism tailored to aged care [5]. It reduces dependence on clinical specialists for routine measurements and supports early intervention by identifying deteriorating wounds faster.

Future directions include:

- Integration with EHRs: Seamless linkage with electronic health records for comprehensive patient tracking.

- 3D Wound Modeling: Enhancing the volume estimation accuracy using stereo imaging or LiDAR-based scanning.

- Telemedicine Support: Enabling remote specialist consultation using shared AR-based wound visualizations.

- Progress Prediction Models: Leveraging machine learning to predict healing trajectories and recommend interventions.

References

- Ronneberger, O., Fischer, P., & Brox, T. (2015). U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention (MICCAI).

- Wang, L., Pedersen, P. C., Strong, D. M., Tulu, B., Agu, E., & Ignotz, R. (2015). Wound image analysis system for diabetics. IEEE Transactions on Biomedical Engineering, 62(2), 479–488.

- Goyal, M., Reeves, N. D., Rajbhandari, S., Spragg, J., & Yap, M. H. (2017). Fully convolutional networks for diabetic foot ulcer segmentation. In IEEE EMBC.

- Apple ARKit & Google ARCore Developer Guides (2023).

- Alahakoon, D., & Bandara, Y. (2021). A review of AR in healthcare applications. Journal of Biomedical Informatics, 117, 103746.

- Attia, R., Hassan, A., El-Masry, N., & Fouad, M. (2020). A deep learning approach for chronic wound segmentation and classification using smartphone images. Journal of Biomedical Informatics, 108, 103514. https://doi.org/10.1016/j.jbi.2020.103514

- Yousuf, A., Khan, M. A., & Tariq, U. (2021). A novel ensemble deep learning framework for classification of wound tissues. Computers in Biology and Medicine, 136, 104705. https://doi.org/10.1016/j.compbiomed.2021.104705

- Lu, Y., Yang, C., & Lin, H. (2023). Application of Augmented Reality in Mobile Health: A Systematic Review. JMIR mHealth and uHealth, 11(1), e39705. https://doi.org/10.2196/39705
