AI Debugging: Faster, Safer Code for Students & Teams

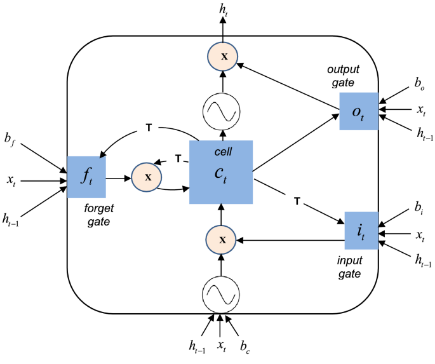

AI debugging is a cohesive, end-to-end workflow that blends static analysis, LLM-driven repair, and fuzzing to ship faster, safer code. Static analysis provides early, compile-time feedback by detecting bugs, security issues, and code smells before runtime. Fuzzing probes edge cases and robustness gaps through automated, coverage-guided inputs. Large-language-model repair suggests targeted patches (often as diffs) along with companion tests that validate the fixes. These capabilities are embedded directly in your IDE (inline diagnostics, explanations, and one-click quick fixes) and enforced in CI/CD through quality gates, policy checks, and regression tests that keep unsafe changes from merging. To accelerate adoption and learning, the system includes visual dashboards for trends and hot spots, sample code and patterns that demonstrate fixes in context, and references to standards (e.g., CWE, OWASP, and project-specific guidelines). Wrapped in secure SDLC practices (versioned artifacts, audit trails of applied fixes, dependency and SCA scans, and privacy-aware telemetry), this integrated approach reduces mean time to repair, cuts late-stage defects, improves learning for developers and students, and measurably hardens production systems.

1. Introduction

Debugging consumes a large share of developer and student time. AI-assisted workflows change the economics by reducing time-to-fix and surfacing issues earlier in the lifecycle. This document presents a practical, standards-aware approach that blends coding assistants, static analysis, fuzzing, and automated program repair (APR) into a cohesive workflow.

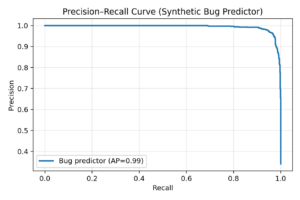

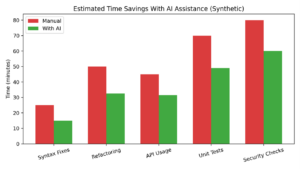

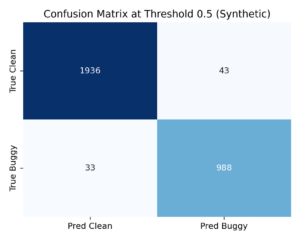

We keep the focus on clear, reproducible techniques: three technical visuals illustrate model behavior and expected time savings; a compact Python AST checker shows how to codify bug patterns; and a 90-day rollout plan helps you adopt these capabilities safely in classrooms and production teams.

2. The Traditional Debugging Challenge

- Fragmented tooling and context switching delay feedback and raise cognitive load.

- Late discovery of defects increases rework and risk, especially for security issues.

- Without guardrails, manual reviews catch well-known bug patterns inconsistently.

3. An AI-Powered Debugging Toolkit

LLM coding assistants: Draft code and tests, explain errors, and speed up boilerplate, with a human reviewing every suggestion.

Static analysis (SAST) + quality gates: Detect bug patterns, code smells, and vulnerabilities in IDE and CI to stop issues early.

Automated Program Repair (APR): Propose patches validated by tests; human approval ensures correctness.

Fuzzing: Continuously explore edge cases to discover crashes and vulnerabilities beyond unit tests.
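The APR entry above describes a generate-and-validate loop: candidate patches are produced, each one is run against the tests, and only the survivors go to a human reviewer. The sketch below illustrates that loop under simplifying assumptions: candidates arrive as plain Python functions rather than diffs, and the names `buggy_median`, `candidate_a`, `candidate_b`, `TESTS`, and `validate_patches` are hypothetical stand-ins for what an LLM or APR tool would produce.

```python
from typing import Callable, Dict, List, Tuple

Fn = Callable[[List[float]], float]


# Hypothetical buggy function: returns the wrong median for even-length input.
def buggy_median(xs: List[float]) -> float:
    s = sorted(xs)
    return s[len(s) // 2]


# Hypothetical candidate patches, standing in for LLM/APR output.
def candidate_a(xs: List[float]) -> float:
    s = sorted(xs)
    return s[(len(s) - 1) // 2]  # still wrong for even-length input


def candidate_b(xs: List[float]) -> float:
    s = sorted(xs)
    mid = len(s) // 2
    return s[mid] if len(s) % 2 else (s[mid - 1] + s[mid]) / 2


CANDIDATES: Dict[str, Fn] = {"candidate_a": candidate_a, "candidate_b": candidate_b}

# Regression tests that define "correct": (input, expected output).
TESTS: List[Tuple[List[float], float]] = [
    ([3.0, 1.0, 2.0], 2.0),
    ([4.0, 1.0, 3.0, 2.0], 2.5),
    ([5.0], 5.0),
]


def passes_tests(fn: Fn) -> bool:
    """True only if fn returns the expected value for every test case."""
    try:
        return all(fn(list(inp)) == expected for inp, expected in TESTS)
    except Exception:
        return False  # a crashing candidate is rejected, not propagated


def validate_patches(candidates: Dict[str, Fn]) -> List[str]:
    """Names of candidates that pass every test.

    Passing tests is necessary but not sufficient, so the result is a
    shortlist for human approval, never an automatic merge.
    """
    return [name for name, fn in candidates.items() if passes_tests(fn)]


if __name__ == "__main__":
    print("original passes:", passes_tests(buggy_median))
    print("awaiting human approval:", validate_patches(CANDIDATES))
```

Here the original function and `candidate_a` both fail the even-length test, so only `candidate_b` is shortlisted. The weak spot of this design is the test suite itself: a patch can only be as trustworthy as the tests that validate it, which is why human approval stays in the loop.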

Figure 1. Precision–Recall curve for a synthetic bug predictor (AP shown).

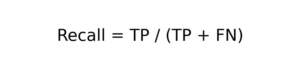

Figure 2. Estimated developer time savings with AI assistance (synthetic).

Figure 3. Confusion matrix at threshold 0.5 (synthetic).

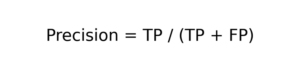

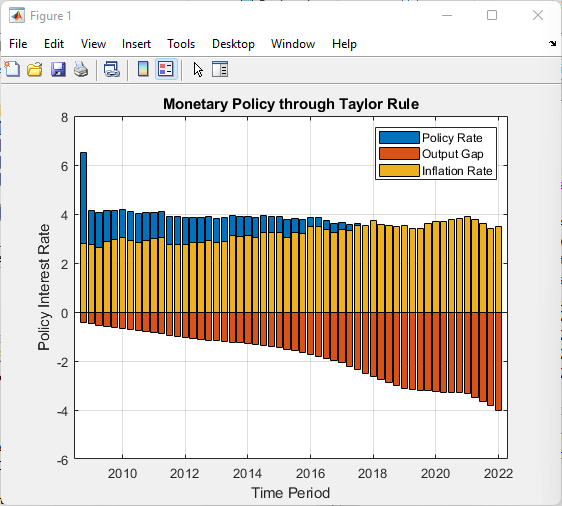
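Figure 1 summarizes the bug predictor with average precision (AP). One common step-wise definition, the one documented for scikit-learn's `average_precision_score`, is AP = Σ (Rₙ − Rₙ₋₁) · Pₙ, where Pₙ and Rₙ are precision and recall at the n-th score threshold. The dependency-free sketch below implements that definition; the function name and example inputs are illustrative assumptions, not the data behind the figure.

```python
from typing import Sequence


def average_precision(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Step-wise AP: sum over thresholds of (R_n - R_{n-1}) * P_n.

    labels: 1 = real bug, 0 = clean. Each distinct score acts as a threshold,
    and predictions with tied scores are admitted together.
    """
    total_pos = sum(labels)
    if total_pos == 0:
        raise ValueError("AP is undefined when there are no positive labels")

    # Rank predictions from most to least confident.
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    tp = fp = 0
    prev_recall = ap = 0.0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Admit every prediction tied at this score before measuring P and R.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1]:
                tp += 1
            else:
                fp += 1
            i += 1
        precision = tp / (tp + fp)
        recall = tp / total_pos
        ap += (recall - prev_recall) * precision  # area of this recall step
        prev_recall = recall
    return ap
```

For example, `average_precision([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0])` returns 5/6 (about 0.833): the first real bug is reached at precision 1.0 and the second at precision 2/3, and each contributes half of the recall.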

4. Measuring Quality: Core Metrics & Formulae

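Use these metrics to tune thresholds and triage policies in AI-assisted debugging. TP, FP, and FN count true positives (real bugs flagged), false positives (clean code flagged), and false negatives (real bugs missed).

Precision: the share of flagged findings that are real bugs.

$$\text{Precision} = \frac{TP}{TP + FP}$$

Recall: the share of real bugs that get flagged.

$$\text{Recall} = \frac{TP}{TP + FN}$$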
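To turn precision and recall into a triage policy, count outcomes at a score threshold (Figure 3 uses 0.5), then sweep thresholds to decide which findings are confident enough to block a merge. The sketch below does this under stated assumptions: the function names, the 0.9 precision target, and the sample scores are illustrative, not recommended values.

```python
from typing import Optional, Sequence, Tuple


def confusion_counts(scores: Sequence[float], labels: Sequence[int],
                     threshold: float = 0.5) -> Tuple[int, int, int, int]:
    """Return (TP, FP, FN, TN). A finding is flagged when score >= threshold;
    labels use 1 for a real bug and 0 for clean code."""
    tp = fp = fn = tn = 0
    for score, is_bug in zip(scores, labels):
        flagged = score >= threshold
        if flagged and is_bug:
            tp += 1
        elif flagged:
            fp += 1
        elif is_bug:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision = TP / (TP + FP); Recall = TP / (TP + FN).
    Reports 0.0 when a denominator is zero (a convention assumed here)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def blocking_threshold(scores: Sequence[float], labels: Sequence[int],
                       min_precision: float = 0.9) -> Optional[float]:
    """Lowest threshold whose precision meets the target.

    Recall can only fall as the threshold rises, so the lowest qualifying
    threshold keeps the most recall for the blocking gate."""
    for t in sorted(set(scores)):
        tp, fp, fn, _ = confusion_counts(scores, labels, t)
        precision, _ = precision_recall(tp, fp, fn)
        if tp and precision >= min_precision:
            return t
    return None  # nothing is precise enough: keep all findings advisory


if __name__ == "__main__":
    # Illustrative scores from a hypothetical bug predictor, with ground truth.
    scores = [0.95, 0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2]
    labels = [1, 1, 1, 0, 1, 0, 1, 0]
    tp, fp, fn, tn = confusion_counts(scores, labels, 0.5)
    print((tp, fp, fn, tn), precision_recall(tp, fp, fn))  # (4, 1, 1, 2) (0.8, 0.8)
    print(blocking_threshold(scores, labels, min_precision=0.9))  # 0.8
```

With this sample, findings scoring 0.8 or higher would block a merge, while those between 0.5 and 0.8 could still be shown as non-blocking warnings in the IDE.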

5. Static Bug Pattern Checks (Python AST Example)

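```python
# ai_debug_checks.py
import ast
import sys
from pathlib import Path


class Issue:
    def __init__(self, path, lineno, rule, msg):
        self.path, self.lineno, self.rule, self.msg = path, lineno, rule, msg

    def __str__(self):
        return f"{self.path}:{self.lineno} [{self.rule}] {self.msg}"


class MutableDefaultChecker(ast.NodeVisitor):
    """PY001: list/dict/set literals as defaults are shared across calls."""

    def __init__(self, path):
        self.path, self.issues = path, []

    def _check_defaults(self, node):
        a = node.args
        # Positional defaults belong to the *last* positional parameters.
        positional = a.posonlyargs + a.args
        pairs = list(zip(positional[len(positional) - len(a.defaults):], a.defaults))
        # kw_defaults is parallel to kwonlyargs; None means "no default".
        pairs += [(p, d) for p, d in zip(a.kwonlyargs, a.kw_defaults) if d is not None]
        for arg, default in pairs:
            if isinstance(default, (ast.List, ast.Dict, ast.Set)):
                self.issues.append(Issue(
                    self.path, node.lineno, "PY001",
                    f"Mutable default for parameter '{arg.arg}'. Use None + init."))
        self.generic_visit(node)

    visit_FunctionDef = visit_AsyncFunctionDef = _check_defaults


class SqlConcatChecker(ast.NodeVisitor):
    """SEC001: SQL built with '+' and passed to execute() risks injection."""

    DANGEROUS_FUNCS = {"execute", "executemany"}

    def __init__(self, path):
        self.path, self.issues = path, []

    def visit_Call(self, node):
        func_name = getattr(node.func, "attr", None)  # cursor.execute -> "execute"
        if func_name in self.DANGEROUS_FUNCS and node.args:
            query = node.args[0]
            if isinstance(query, ast.BinOp) and isinstance(query.op, ast.Add):
                self.issues.append(Issue(
                    self.path, node.lineno, "SEC001",
                    "Possible SQL concat in execute(); use parameters."))
        self.generic_visit(node)


def check_source(source, path="<string>"):
    """Run every checker over one module; return its issues sorted by line."""
    tree = ast.parse(source, filename=str(path))
    checkers = [MutableDefaultChecker(path), SqlConcatChecker(path)]
    for checker in checkers:
        checker.visit(tree)
    return sorted((i for c in checkers for i in c.issues), key=lambda i: i.lineno)


if __name__ == "__main__":
    # Usage: python ai_debug_checks.py [paths...]; exit code 1 if issues are found.
    issues = []
    for root in map(Path, sys.argv[1:] or ["."]):
        for f in [root] if root.is_file() else sorted(root.rglob("*.py")):
            issues += check_source(f.read_text(encoding="utf-8"), f)
    for issue in issues:
        print(issue)
    sys.exit(1 if issues else 0)
```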

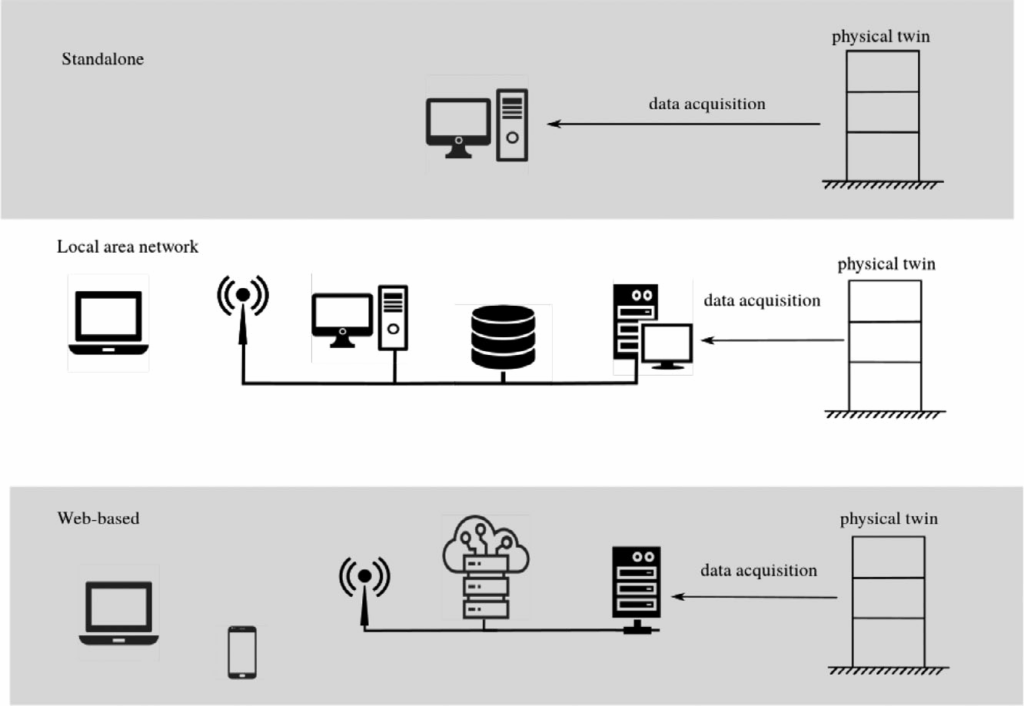
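For reference, these are the fixes the two rules ask for: a `None` sentinel initialized inside the function (PY001) and a parameterized query (SEC001). The sketch uses the standard-library `sqlite3` module with an in-memory database; the `users` table and the functions `add_tag` and `find_user` are hypothetical. Placeholder syntax varies by database driver (`sqlite3` uses `?`).

```python
import sqlite3
from typing import List, Optional, Tuple


# PY001 fix: default to None and build a fresh list on every call.
def add_tag(tag: str, tags: Optional[List[str]] = None) -> List[str]:
    if tags is None:
        tags = []
    tags.append(tag)
    return tags


# SEC001 fix: bind user input as a parameter instead of concatenating it.
def find_user(conn: sqlite3.Connection, name: str) -> List[Tuple[int, str]]:
    cur = conn.execute("SELECT id, name FROM users WHERE name = ?", (name,))
    return cur.fetchall()


if __name__ == "__main__":
    print(add_tag("a"), add_tag("b"))  # ['a'] ['b']: no state shared between calls

    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    print(find_user(conn, "alice"))          # [(1, 'alice')]
    print(find_user(conn, "x' OR '1'='1"))   # []: the payload is treated as data
```

Running the checker above over this snippet should report nothing: the SQL passed to `execute()` and `executemany()` is a plain string literal, and no default is a mutable literal.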

6. A 90-Day Rollout Plan (Teams & Classrooms)

Weeks 1–2: Baseline & policy — Measure cycle time and defect leakage; approve an enterprise AI assistant; document data-handling rules.

Weeks 3–6: Shift left — Enable IDE analysis and CI quality gates; add fuzzing to high-risk components.

Weeks 7–10: APR pilot — Let APR/LLM propose test-validated patches for failing PRs; require human approval.

Weeks 11–12: Review & scale — Compare KPIs with baseline; scale to more repos and update secure coding standards.
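Weeks 1–2 and 11–12 bracket the pilot with the same measurements, so the comparison comes down to a few lines of arithmetic. The sketch assumes two illustrative definitions: defect leakage as the share of all defects found after release, and cycle time as the median commit-to-merge hours per PR. Teams define both differently, and the class, field names, and sample numbers are hypothetical.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List


@dataclass
class PeriodStats:
    cycle_hours: List[float]   # commit-to-merge time per PR (assumed metric)
    defects_pre_release: int   # caught in review, CI, or testing
    defects_post_release: int  # escaped to users ("leaked")


def kpis(p: PeriodStats) -> Dict[str, float]:
    """Median cycle time and defect leakage for one period."""
    total = p.defects_pre_release + p.defects_post_release
    return {
        "median_cycle_hours": median(p.cycle_hours),
        "defect_leakage": p.defects_post_release / total if total else 0.0,
    }


def compare(baseline: PeriodStats, pilot: PeriodStats) -> Dict[str, float]:
    """Relative change per KPI; negative means improvement for both metrics.
    KPIs with a zero baseline are skipped to avoid dividing by zero."""
    before, after = kpis(baseline), kpis(pilot)
    return {k: (after[k] - before[k]) / before[k] for k in before if before[k]}


if __name__ == "__main__":
    # Made-up numbers purely to show the calculation.
    baseline = PeriodStats(cycle_hours=[10, 20, 30], defects_pre_release=16,
                           defects_post_release=4)
    pilot = PeriodStats(cycle_hours=[8, 12, 16], defects_pre_release=18,
                        defects_post_release=2)
    print(compare(baseline, pilot))
```

With these sample inputs, median cycle time falls from 20 to 12 hours (a 40% reduction) and leakage falls from 20% to 10% (a 50% reduction). Reporting relative change keeps KPIs with different units on one scale when deciding whether to scale up.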

7. Risks & Mitigations

- Insecure or incorrect AI suggestions → Gate with tests, SAST/DAST, and human review.

- Secret leakage → Use enterprise assistants with strong privacy controls; restrict data scopes.

- Alert fatigue → Calibrate thresholds; route only high-confidence issues to blocking gates.

- Coverage gaps → Add fuzzing/property tests to complement unit tests.
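To show how fuzzing closes coverage gaps, the sketch below throws thousands of random inputs at a function and checks properties that must hold for every input, rather than a few hand-picked cases. It uses only the standard library's `random` module and is a simplification: real fuzzing would use a coverage-guided engine such as Atheris for Python. `parse_port_buggy`, `parse_port_fixed`, and the two properties are hypothetical.

```python
import random
from typing import Callable, Optional

ALPHABET = "0123456789+- x"  # digits plus characters likely to trip a parser


def parse_port_buggy(text: str) -> int:
    """Hypothetical function under test: parse a TCP port number."""
    value = int(text)  # ValueError on junk input is an acceptable outcome
    if value < 0:      # BUG: accepts 0 and values above 65535
        raise ValueError(f"negative port: {value}")
    return value


def parse_port_fixed(text: str) -> int:
    value = int(text)
    if not 1 <= value <= 65535:
        raise ValueError(f"port out of range: {value}")
    return value


def random_input(rng: random.Random, max_len: int = 6) -> str:
    return "".join(rng.choice(ALPHABET) for _ in range(rng.randint(0, max_len)))


def fuzz(target: Callable[[str], int], iterations: int = 10_000,
         seed: int = 0) -> Optional[str]:
    """Return the first input that violates a property, or None.

    Properties (assumptions for this sketch):
      1. bad input is rejected with ValueError, never another exception;
      2. any accepted value is a valid TCP port, 1..65535.
    """
    rng = random.Random(seed)  # seeded so any failure is reproducible
    for _ in range(iterations):
        text = random_input(rng)
        try:
            value = target(text)
        except ValueError:
            continue           # clean rejection satisfies property 1
        except Exception:
            return text        # unexpected crash violates property 1
        if not 1 <= value <= 65535:
            return text        # out-of-range result violates property 2
    return None


if __name__ == "__main__":
    print("buggy:", repr(fuzz(parse_port_buggy)))
    print("fixed:", repr(fuzz(parse_port_fixed)))
```

A unit test that only checks `"80"` and `"abc"` passes for both versions; the random search surfaces inputs such as `"0"` or large numbers that the buggy version wrongly accepts. Seeding the generator means a reported failure can be replayed and turned into a permanent regression test.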

8. Conclusion

AI debugging should be viewed as a comprehensive, iterative workflow rather than a single tool or step in the development process. It brings together multiple complementary techniques, each serving a distinct purpose: intelligent assistants accelerate problem-solving and provide contextual guidance; static analysis tools deliver early feedback by detecting potential issues before runtime; fuzzing introduces depth by uncovering edge cases and hidden vulnerabilities through randomized testing; and automated program repair (APR) enables rapid, targeted fixes to identified defects. All these methods operate within the framework of secure software development lifecycle (SDLC) practices, ensuring that security and compliance remain integral throughout the process. When applied collectively, this approach not only reduces debugging cycle time and improves overall software quality but also enhances the learning experience for students and significantly hardens production systems against failures and security risks.

